Think|Stack's very own Technical Service Delivery Manager, Jake Jones, did a really cool thing: he created an EC2 module for Terraform. If that sparked your interest, you're in for a treat. Here is a quick tutorial on how Jake created an EC2 instance with a CloudWatch metric alarm using Terraform.

If you want to see the repository, 👉 click here.

This module will do a few things:

- Create an EC2 Instance

- Automatically look up the latest Windows Server 2019 AMI for the EC2 instance.

- Create and attach an additional EBS volume (drive).

- Create a CloudWatch metric alarm to monitor CPU utilization.

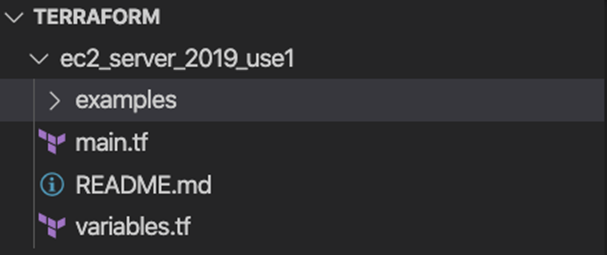

The folder structure looks like this:

First things first: I created the main.tf file, which contains all of the configuration except for the variables and outputs.

The main.tf has a few parts to it.

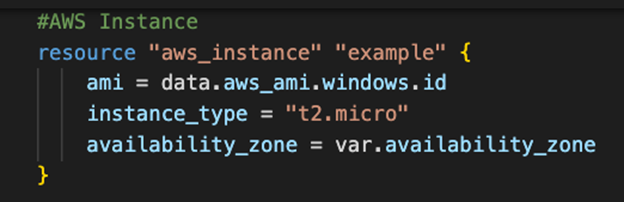

AWS Instance Code

The first section is the instance resource code:

```hcl
# AWS instance
resource "aws_instance" "example" {
  ami               = data.aws_ami.windows.id
  instance_type     = "t2.micro"
  availability_zone = var.availability_zone

  lifecycle {
    ignore_changes = [ami]
  }
}
```

You will notice a few things here.

- The instance type is hard-coded in the module to t2.micro.

- The availability zone is set using a variable.

- The AMI is set using a data source.

- The lifecycle block tells Terraform to ignore AMI changes (use this if you don't want your instance to be replaced whenever a newer AMI is published).
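Because the instance type is hard-coded, every server built from this module is a t2.micro. If you want callers to choose, one option is to expose it as a variable. This is a sketch: the instance_type variable is my own addition and is not part of Jake's module. The resource block shown would replace the existing one in main.tf rather than sit alongside it.

```hcl
# variables.tf: hypothetical variable, not part of the original module
variable "instance_type" {
  type        = string
  description = "EC2 instance type for the Windows server"
  default     = "t2.micro" # same value the module currently hard-codes
}

# main.tf: the instance resource, now reading the type from the variable
resource "aws_instance" "example" {
  ami               = data.aws_ami.windows.id
  instance_type     = var.instance_type
  availability_zone = var.availability_zone

  lifecycle {
    # Keep the existing instance when the data source resolves a newer AMI
    ignore_changes = [ami]
  }
}
```

Keeping the old value as the default means existing callers see no change in behavior.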

We will get to the availability zone piece in a bit. First, let's tackle the data source used for the ami argument.

Data for AMI Using a Filter

The next bit of code for the filter looks like this:

```hcl
# AMI filter for Windows Server 2019 Base
data "aws_ami" "windows" {
  most_recent = true

  filter {
    name   = "name"
    values = ["Windows_Server-2019-English-Full-Base-*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }

  owners = ["801119661308"] # Amazon (publisher of the official Windows AMIs)
}
```

The most_recent argument is set to true. If more than one AMI matches the criteria in our filters, Terraform uses the most recent one.

Next, notice that the name value is Windows_Server-2019-English-Full-Base-* with a star at the end. The star is a wildcard, so the filter matches any name that starts with Windows_Server-2019-English-Full-Base-, no matter what text comes after it. We do this because the standard naming format puts the date there. If we hard-coded the date a specific AMI was created, the filter would match only that one image, and setting most_recent to true would not do us any good.

After that, we set virtualization-type to hvm. I won't go into a lot of detail here; just know that this is a good idea (current-generation instance types only support HVM AMIs), and it's worth doing some additional research on HVM vs. PV.

Last, we set owners to 801119661308, the AWS account ID that Amazon uses to publish its official Windows AMIs.
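The aws_ami data source also accepts an owner alias instead of a numeric account ID. Below is a sketch of the same lookup using the amazon alias, meant to replace the data block above rather than sit next to it. It should resolve to the same image in practice, because the name filter already narrows results to Windows Server 2019 Base images, but the account ID is the stricter of the two since the alias covers every Amazon-owned publishing account.

```hcl
# Same lookup, filtering by the "amazon" owner alias instead of the account ID
data "aws_ami" "windows" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["Windows_Server-2019-English-Full-Base-*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }
}
```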

Now I'm sure you're asking: how the heck do I actually get this information? You'll need to run a quick command with the AWS CLI.

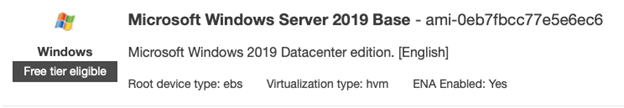

First, log in to the AWS console and find the AMI you want information for.

For example, if you click Launch instance in the EC2 console, you can search for the AMI.

After that, copy the AMI ID and run this command:

```shell
aws ec2 describe-images --owners amazon --image-ids ami-0eb7fbcc77e5e6ec6
```

Make sure you replace my AMI ID with your own.

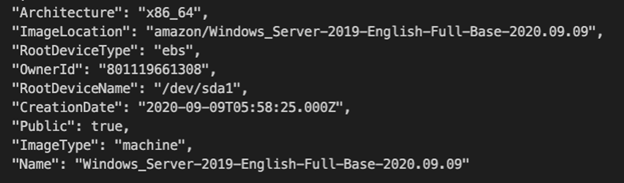

After you run the command, there will be a lot of output. In the last block, you will see something that looks like this:

Here you can see the Name and the OwnerId (which we use for owners). You can copy these values to use in your own AMI filter!

You can also see what I meant about the date: it appears at the end of the Name. Remove it and add a * in its place if you always want the most recent AMI to be used.
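To double-check which image the wildcard and most_recent actually picked, you can expose a few attributes of the data source as an output and compare them with the CLI output. This is a sketch; the output name windows_ami is just an example and is not part of Jake's module.

```hcl
# Surface the AMI that data.aws_ami.windows resolved to
output "windows_ami" {
  description = "AMI selected by the aws_ami.windows data source"
  value = {
    id            = data.aws_ami.windows.id
    name          = data.aws_ami.windows.name
    creation_date = data.aws_ami.windows.creation_date
  }
}
```

After an apply, running terraform output windows_ami prints the selected ID, name, and creation date.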

EBS Volume and Attachment

Alrighty, now it is time to tackle the EBS volume and attachment. The code is going to look like this:

```hcl
resource "aws_ebs_volume" "example" {
  availability_zone = var.availability_zone
  size              = 40 # GiB
}

resource "aws_volume_attachment" "ebs_att" {
  device_name = "/dev/sdh"
  volume_id   = aws_ebs_volume.example.id
  instance_id = aws_instance.example.id
}
```

For the availability_zone argument, we use the variable again. This makes sure the instance and the EBS volume end up in the same AZ, because a volume can only be attached to an instance in its own Availability Zone. Just below that, size sets the volume to 40 GiB.

In the attachment, volume_id is set to aws_ebs_volume.example.id. This resource address basically says: find the aws_ebs_volume resource named example and grab its id. If you look back at our EBS volume code, you can see it is named example.

Last, instance_id is set to aws_instance.example.id, again using a resource address to point to the instance defined in the module (look back at the instance resource code for a reminder).
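Reading the same variable in both resources keeps the instance and volume together. Another option, sketched below as a replacement for the aws_ebs_volume block (not how Jake's module does it), is to read the zone straight from the instance, so the volume always follows wherever the instance actually lands.

```hcl
# Alternative: take the AZ from the instance so the two can never drift apart
resource "aws_ebs_volume" "example" {
  availability_zone = aws_instance.example.availability_zone
  size              = 40 # GiB
}
```

A side effect of this reference is an implicit dependency: Terraform creates the instance before the volume, whereas with the shared variable the two can be created in parallel.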

Now on to the last piece: the CloudWatch metric alarm!

CloudWatch Metric Alarm

The alarm is the last piece of configuration in the main.tf file. The code looks like this:

```hcl
resource "aws_cloudwatch_metric_alarm" "ec2_cpu" {
  alarm_name                = "cpu-utilization"
  comparison_operator       = "GreaterThanOrEqualToThreshold"
  evaluation_periods        = 2
  metric_name               = "CPUUtilization"
  namespace                 = "AWS/EC2"
  period                    = 120 # seconds
  statistic                 = "Average"
  threshold                 = 80 # percent
  alarm_description         = "This metric monitors ec2 cpu utilization"
  insufficient_data_actions = []

  dimensions = {
    InstanceId = aws_instance.example.id
  }
}
```

This alarm goes into the ALARM state when average CPU utilization is at or above 80% for 2 consecutive evaluation periods of 120 seconds each. If you want more detail, there is a ton of documentation on it 👉 here.

The biggest thing to note here is dimensions: we specify InstanceId and use a resource address to point back to the instance we want the alarm to watch.
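As written, the alarm has no actions, so it only changes state in the CloudWatch console. Its name is also fixed, so calling the module twice in the same account and region would likely leave both copies managing a single alarm named cpu-utilization. Here is a sketch of a replacement alarm block that addresses both; the alarm_sns_topic_arn variable is hypothetical and assumes you already have an SNS topic to notify.

```hcl
# Hypothetical variable: ARN of an existing SNS topic (empty = no notifications)
variable "alarm_sns_topic_arn" {
  type    = string
  default = ""
}

resource "aws_cloudwatch_metric_alarm" "ec2_cpu" {
  # Include the instance ID so each module instance gets its own alarm
  alarm_name          = "cpu-utilization-${aws_instance.example.id}"
  comparison_operator = "GreaterThanOrEqualToThreshold"
  evaluation_periods  = 2
  metric_name         = "CPUUtilization"
  namespace           = "AWS/EC2"
  period              = 120 # seconds
  statistic           = "Average"
  threshold           = 80 # percent
  alarm_description   = "This metric monitors ec2 cpu utilization"

  # compact() drops the empty string, so no action is set when no topic is given
  alarm_actions = compact([var.alarm_sns_topic_arn])
  ok_actions    = compact([var.alarm_sns_topic_arn])

  dimensions = {
    InstanceId = aws_instance.example.id
  }
}
```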

Finally, we are at the configuration of our variables.

Variable Configuration

For our variable we just have a really simple configuration in a file called variables.tf.

```hcl
variable "availability_zone" {
  type    = string
  default = "us-east-1a"
}
```

You can set the default to whatever works best for you.
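The main.tf description mentions an outputs file, which isn't shown in this post. As a sketch, an outputs.tf could expose the IDs a calling configuration is most likely to need; the output names here are my own and not necessarily what is in Jake's repository.

```hcl
# outputs.tf (sketch): values a calling configuration might want
output "instance_id" {
  description = "ID of the Windows Server 2019 instance"
  value       = aws_instance.example.id
}

output "private_ip" {
  description = "Private IP address of the instance"
  value       = aws_instance.example.private_ip
}

output "data_volume_id" {
  description = "ID of the additional EBS volume"
  value       = aws_ebs_volume.example.id
}

output "cpu_alarm_arn" {
  description = "ARN of the CPU utilization alarm"
  value       = aws_cloudwatch_metric_alarm.ec2_cpu.arn
}
```

With these in place, a caller could reference values such as module.ec2.instance_id.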

Conclusion

That’s it! You can now upload this to GitHub or keep it local and call your module with just a few lines of code!

Call it from a GitHub location:

```hcl
provider "aws" {
  region = "us-east-1"
}

module "ec2" {
  source = "github.com/somerepo/"
}
```

Call it from a local path:

```hcl
provider "aws" {
  region = "us-east-1"
}

module "ec2" {
  source = "../some-directory/"
}
```

Heck, maybe even specify your availability zone.

```hcl
module "ec2_instance" {
  source            = "github.com/jakeasarus/terraform/ec2_server_2019_use1/"
  availability_zone = var.availability_zone

  # Make sure to add availability_zone to your variables if you do this
}
```
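Here is a sketch of a complete root configuration for that last case, including the variable declaration the comment asks for. The default of us-east-1b is only an example, and the validation block is an optional addition of mine that catches AZs outside the provider's region before anything is created.

```hcl
# Root configuration calling the module with a caller-supplied AZ
provider "aws" {
  region = "us-east-1"
}

variable "availability_zone" {
  type        = string
  description = "AZ for the instance and its EBS volume; must be in the provider's region"
  default     = "us-east-1b" # example value

  validation {
    # Reject zones that don't belong to us-east-1
    condition     = can(regex("^us-east-1[a-z]$", var.availability_zone))
    error_message = "The availability_zone must be an AZ in us-east-1, e.g. us-east-1a."
  }
}

module "ec2_instance" {
  source            = "github.com/jakeasarus/terraform/ec2_server_2019_use1/"
  availability_zone = var.availability_zone
}
```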


WE TRANSFORM & PROTECT

We Transform & Protect by putting People Before Technology. We are a Managed Service Provider focused on cybersecurity and cloud solutions that support digital transformations. We believe that the technology your business relies on should be used to drive transformation and lead to a seamless user experience. In uncertain times it’s important to partner with people and companies you can trust. Think|Stack was built to handle the unpredictable, to help those who weren’t.

If you’re unsure what to do next or if you have questions about your technology, our Think|Stack tribe is here to help, contact us anytime.

About the Author

Jake Jones

IT professional since 2013 with experience in HashiCorp Terraform, AWS, and cloud migrations, and a lifelong student. I am passionate about technology and enjoy nothing more than tinkering with a new tool, idea, or service. After spending so much time learning, I have decided it is time to give back to the technology community and begin teaching on Udemy!
